Closed Form Solution For Ridge Regression

Lasso performs variable selection in the linear model, but it has no closed-form solution (it is solved by quadratic programming from convex optimization). Ridge regression is motivated by a constrained minimization problem: the estimate $\hat\theta_{ridge}$ minimizes the least-squares loss subject to a bound $\|\theta\|_2^2 \le t$ on the squared norm of the coefficients. In the classification setting, the corresponding classifier is called the discriminative ridge machine (DRM).

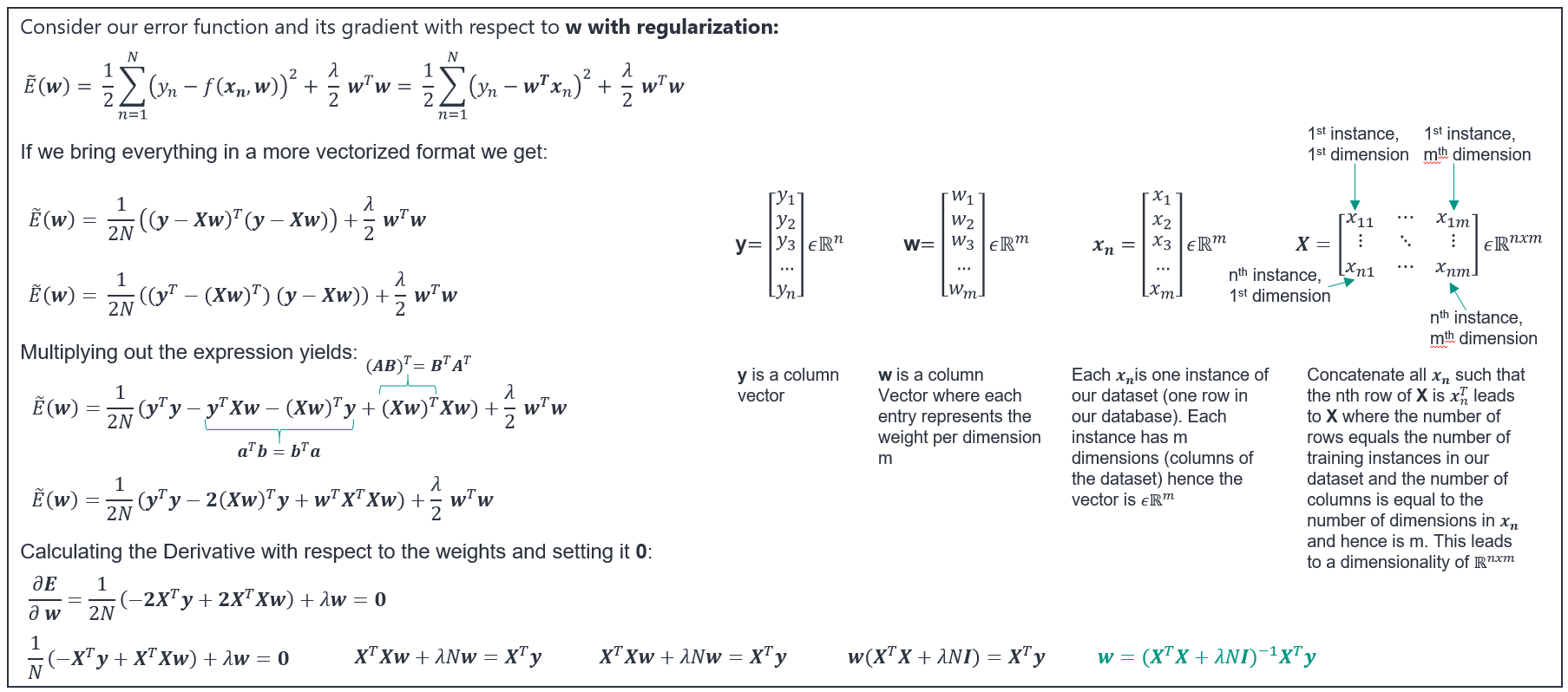

If the matrix $(X^\top X + \lambda I)$ is invertible, then the ridge regression estimate is given by $\hat{w} = (X^\top X + \lambda I)^{-1} X^\top y$.
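The closed-form estimate can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `ridge_closed_form`, the synthetic data, and the choice to omit an intercept term are all assumptions made for the example. It also assumes the samples are stored in the rows of `X`.

```python
import numpy as np

def ridge_closed_form(X, y, lam):
    """Return w = (X^T X + lam * I)^{-1} X^T y, with samples in the rows of X."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    # Solve the linear system rather than forming the inverse explicitly.
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
w_true = np.array([1.5, -2.0, 0.5])
y = X @ w_true + 0.1 * rng.normal(size=50)

w_ridge = ridge_closed_form(X, y, lam=1.0)
w_ols = ridge_closed_form(X, y, lam=0.0)  # lam = 0 reduces to ordinary least squares
```

Using `np.linalg.solve` instead of `np.linalg.inv` is a common numerical choice. Both compute the same estimate when the matrix is invertible.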

Part of the book series: Lecture Notes in Computer Science (LNCS, volume 12716).

Show that the ridge optimization problem has the closed-form solution $\hat{w} = (X^\top X + \lambda I)^{-1} X^\top y$.
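A derivation of the closed-form ridge solution can be sketched as follows. It assumes the penalized form of the objective, samples stored in the rows of $X$, and no intercept term. The symbol $f_{ridge}(w, \lambda)$ is used here for the penalized loss. Setting the gradient to zero gives a linear system in $w$:

```latex
\begin{align*}
f_{ridge}(w, \lambda) &= \|y - Xw\|_2^2 + \lambda \|w\|_2^2 \\
\nabla_w f_{ridge}(w, \lambda) &= -2X^\top (y - Xw) + 2\lambda w = 0 \\
(X^\top X + \lambda I)\, w &= X^\top y \\
\hat{w} &= (X^\top X + \lambda I)^{-1} X^\top y
\end{align*}
```

The last step requires $(X^\top X + \lambda I)$ to be invertible, which holds for any $\lambda > 0$ because $X^\top X$ is positive semidefinite.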

Another way to look at the problem is to see the equivalence between the penalized objective $f_{ridge}(\beta, \lambda)$ and $f_{OLS}(\beta) = (y - \beta^\top X)^\top (y - \beta^\top X)$ constrained to $\|\beta\|_2^2 \le t$. Ridge regression (a.k.a. $L_2$ regularization) has a tuning parameter $\lambda$ that balances fit against the magnitude of the coefficients.

OLS can be optimized with gradient descent, Newton's method, or in closed form: $w = (XX^\top)^{-1} X y^\top$, where $X = [x_1, \dots, x_n]$ holds the samples as columns.
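As a rough check that the iterative and closed-form routes agree for OLS, the sketch below runs plain gradient descent and compares it with the closed-form weights. The synthetic data, step-size rule, and iteration count are illustrative assumptions. The samples are stored as columns of `X` to match the $X = [x_1, \dots, x_n]$ convention.

```python
import numpy as np

# Synthetic data (hypothetical, for illustration only).
rng = np.random.default_rng(1)
d, n = 3, 200
X = rng.normal(size=(d, n))          # columns x_1, ..., x_n are the samples
w_true = np.array([2.0, -1.0, 0.5])
y = w_true @ X + 0.05 * rng.normal(size=n)

# Closed form: w = (X X^T)^{-1} X y^T, computed by solving the linear system.
w_closed = np.linalg.solve(X @ X.T, X @ y)

# Gradient descent on the mean squared error (1/n) * ||X^T w - y||^2.
lipschitz = 2.0 * np.linalg.eigvalsh(X @ X.T).max() / n  # largest curvature of the loss
step = 1.0 / lipschitz
w_gd = np.zeros(d)
for _ in range(500):
    grad = (2.0 / n) * X @ (X.T @ w_gd - y)
    w_gd -= step * grad
```

On a small, well-conditioned problem like this one, the two estimates should agree to within numerical tolerance. The closed form is usually preferred when the number of features is small enough to solve the linear system directly.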

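That is, the solution is a global minimum only if $f_{ridge}(\beta, \lambda)$ is strictly convex. This can be shown to be true: when the rows of $X$ are the samples, the Hessian of the penalized objective is $2(X^\top X + \lambda I)$, which is positive definite for any $\lambda > 0$.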
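The convexity claim can be checked numerically. The sketch below assumes the penalized objective $\|y - Xw\|_2^2 + \lambda \|w\|_2^2$ with samples in the rows of `X`, so its Hessian is $2(X^\top X + \lambda I)$. The data and variable names are illustrative assumptions. With fewer samples than features, the OLS Hessian is singular, while the ridge Hessian has all eigenvalues at least $2\lambda$.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(5, 8))   # fewer samples (rows) than features, so X^T X is singular
lam = 0.1

hessian_ols = 2.0 * X.T @ X
hessian_ridge = 2.0 * (X.T @ X + lam * np.eye(X.shape[1]))

# eigvalsh is appropriate here because both Hessians are symmetric.
min_eig_ols = np.linalg.eigvalsh(hessian_ols).min()      # approximately zero
min_eig_ridge = np.linalg.eigvalsh(hessian_ridge).min()  # approximately 2 * lam
```

A strictly positive smallest eigenvalue means the objective is strictly convex, so the stationary point given by the closed-form solution is the unique global minimum.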
